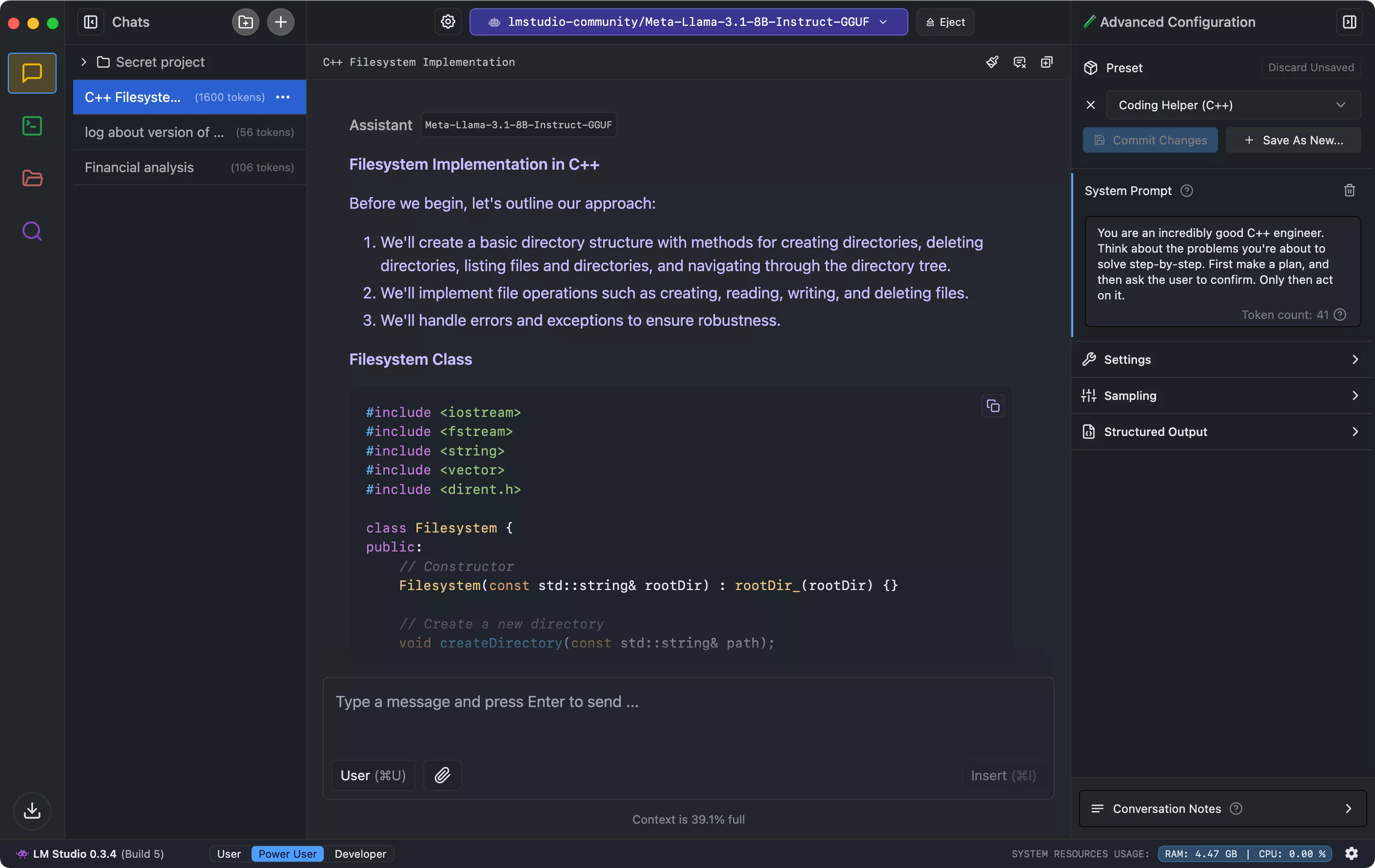

LM Studio supports any GGUF Llama, Mistral, Phi, Gemma, StarCoder, etc model on Hugging Face. LM Studio does not collect data or monitor your actions. Your data stays local on your machine. It's free for personal use. For business use, please get in touch.

With LM Studio, you can:

- Run LLMs on your laptop, entirely offline

- Chat with your local documents (new in 0.3)

- Use models through the in-app Chat UI or an OpenAI compatible local server

- Download any compatible model files from Hugging Face 🤗 repositories

- Discover new & noteworthy LLMs right inside the app's Discover page

What's New

DeepSeek R1: open source reasoning model

Last week, Chinese AI company DeepSeek released its highly anticipated open-source reasoning models, dubbed DeepSeek R1. DeepSeek R1 models, both distilled* and full size, are available for running locally in LM Studio on Mac, Windows, and Linux.

DeepSeek R1 models, distilled and full size

- If you've gone online in the last week or so, there's little chance you missed the news about DeepSeek.

- DeepSeek R1 models represent significant and exciting milestone for openly available models: you can now run "reasoning" models, similar in style to OpenAI's o1 models, on your local system. All you need is enough RAM.

The release from DeepSeek included:

- DeepSeek-R1 - the flagship 671B parameter reasoning model

- DeepSeek-R1 distilled models: A collection of smaller pre-existing models fine-tuned using DeepSeek-R1 generations (1.5B, 7B, 8B, 14B, 32B, 70B parameters). An example is DeepSeek-R1-Distill-Qwen-7B.

- DeepSeek-R1-Zero - An R1 prototype fine-tuned using only unsupervised reinforcement learning (RL)

Can I run DeepSeek R1 models locally?

- Yes, if you have enough RAM.

Here's how to do it:

- Download LM Studio for your operating system from here.

- Click the 🔎 icon on the sidebar and search for "DeepSeek"

- Pick an option that will fit on your system. For example, if you have 16GB of RAM, you can run the 7B or 8B parameter distilled models. If you have ~192GB+ of RAM, you can run the full 671B parameter model.

- Load the model in the chat, and start asking questions!

Reasoning models, what are they?

Reasoning models were trained to "think" before providing a final answer. This is done using a technique called "Chain-of-thought" (CoT). CoT is a technique that encourages models to break down complex problems into smaller, more manageable steps. This allows the model to arrive at a final answer through a series of intermediate steps, rather than attempting to solve the problem in one go. DeepSeek's CoT is contained in

When asked a non-trivial question, DeepSeek models will start their response with a

Below is output from DeepSeek-R1-Distill-Qwen-7B that demonstrates its ability to "think" to holistically answer the question "Are tomatoes fruits?" The thinking section is wrapped in

- New: DeepSeek R1 thought process will be contained in a collapsible UI element

- Added support for rendering math wrapped in \[ ... \] and \( ... \) blocks.

- Show "Incompatible" status on Windows/Linux AVX-only machines in CPU section of Hardware page

- Fixed bug where no CPU info would show in Hardware page if no LM Runtime was selected

- Fixed a bug where LM Runtimes were not bundled correctly in the Windows x86 installer

- Fixed a bug where duplicate Vulkan GPUs were displayed on hardware page

- Fixed a bug where messages in old chats won't show up.

Minimum requirements:

- M1/M2/M3/M4 Mac

- AWindows / Linux PC with a processor that supports AVX2.

Does LM Studio collect any data?

No. One of the main reasons for using a local LLM is privacy, and LM Studio is designed for that. Your data remains private and local to your machine.

Can I use LM Studio at work?

We'd love to enable you. Please fill out the LM Studio @ Work request form and we will get back to you as soon as we can.