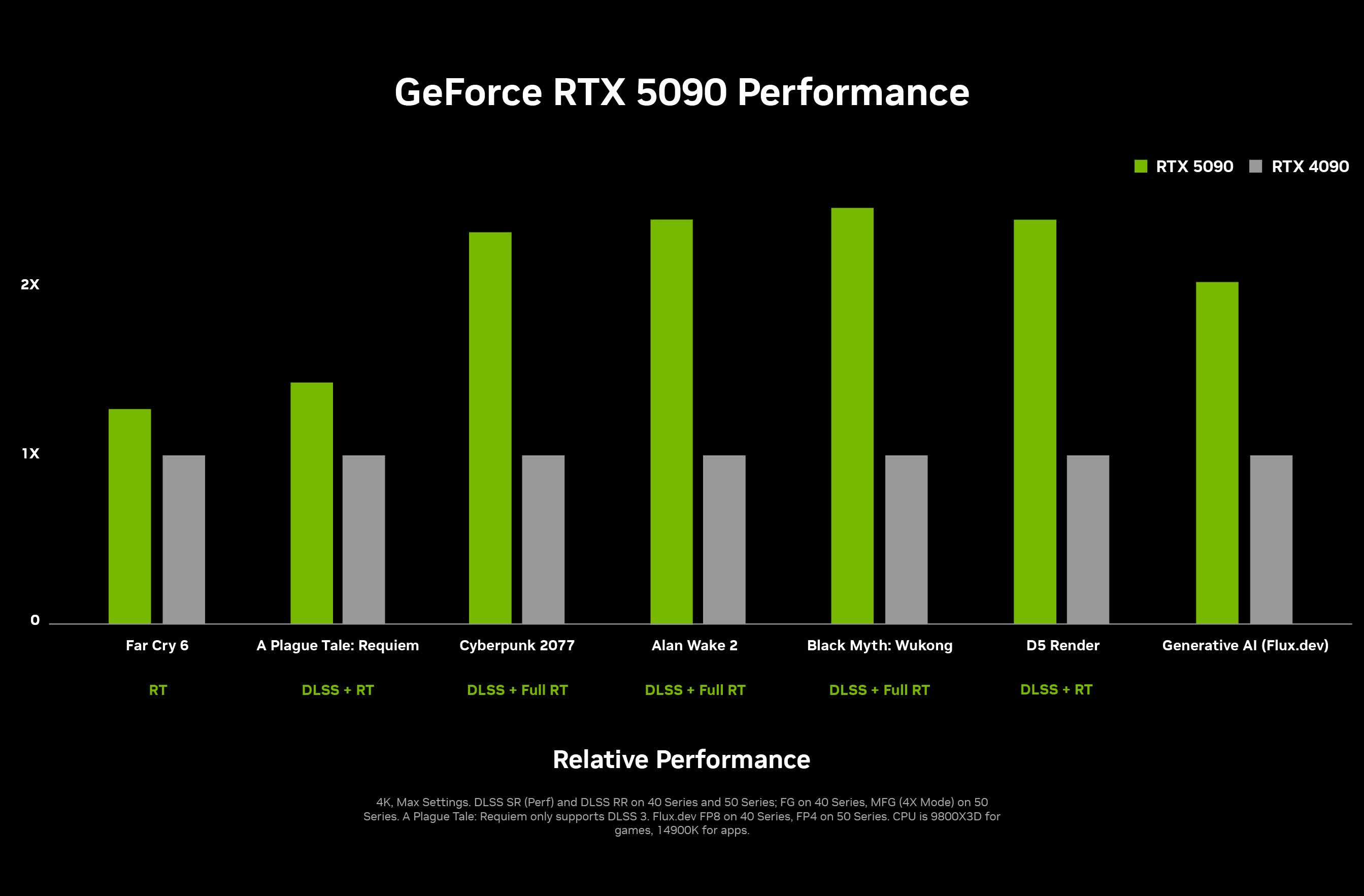

Something to look forward to: Nvidia announced next-gen RTX 50 series graphics cards earlier this month at CES 2025. While gamers are still waiting for the official launch, early benchmarks appear to suggest that the RTX 5090 will see only a moderate performance boost over its predecessor, the RTX 4090. For a full understanding of how the RTX 5090 performs in popular games, wait for our exhaustive review later this week.

Update (Jan 23): Our Nvidia RTX 5090 review is now live.

The RTX 5090 was put through its paces in Geekbench 6, where it posted impressive scores in both the OpenCL and Vulkan tests (via BenchLeaks). In the OpenCL test, the 5090 scored 367,740 points, 15 percent more than the RTX 4090. In the Vulkan test, the new flagship chalked up 359,742 points, 37 percent higher than the RTX 4090's score.

[GB6 GPU] Unknown GPU

CPU: Intel Core i9-12900K (16C 24T)

CPUID: 90672 (GenuineIntel)

GPU: GeForce RTX 5090

API: Vulkan

Score: 359742

PCI-ID: 10DE:2B85

https://t.co/7qCqbm4cCy

– Benchleaks (@BenchLeaks) January 17, 2025

In the CUDA test, the card scored 542,157 points, nearly 28 percent higher than the 424,332 points racked up by the RTX 4090. While that is an impressive score, it is not as large a generational leap as some had expected, given that the new card has about 33 percent more CUDA cores than its predecessor (21,760 versus 16,384).
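To make the per-core comparison concrete, here is a small sketch that computes the generational uplift from the Geekbench CUDA scores quoted above and normalizes it by CUDA core count. The core counts (21,760 for the RTX 5090 and 16,384 for the RTX 4090) are taken from Nvidia's published specifications, not from the leak itself, and the helper name `uplift_pct` is purely illustrative.

```python
def uplift_pct(new: float, old: float) -> float:
    """Return the percentage by which `new` exceeds `old`."""
    return (new / old - 1.0) * 100.0


# Geekbench 6 CUDA scores from the leaked results
rtx5090_cuda = 542_157
rtx4090_cuda = 424_332

# Assumption: CUDA core counts from Nvidia's published specs (not part of the leak)
cores_5090 = 21_760
cores_4090 = 16_384

score_gain = uplift_pct(rtx5090_cuda, rtx4090_cuda)
core_gain = uplift_pct(cores_5090, cores_4090)

# Score per CUDA core, RTX 5090 relative to RTX 4090
per_core_change = uplift_pct(rtx5090_cuda / cores_5090, rtx4090_cuda / cores_4090)

print(f"CUDA score uplift:  {score_gain:.1f}%")
print(f"CUDA core increase: {core_gain:.1f}%")
print(f"Score per core:     {per_core_change:+.1f}%")
```

A negative per-core figure means the score grew more slowly than the core count did, which is the gap the paragraph above points to. A single synthetic test cannot say whether that comes from clocks, memory bandwidth, or the benchmark itself.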

[GB6 GPU] Unknown GPU

CPU: Intel Core i9-12900K (16C 24T)

CPUID: 90672 (GenuineIntel)

GPU: GeForce RTX 5090

API: Open CL

Score: 367740

PCI-ID: 10DE:2B85

VRAM: 31.8 GB

https://t.co/ExslQtwglb

– Benchleaks (@BenchLeaks) January 17, 2025

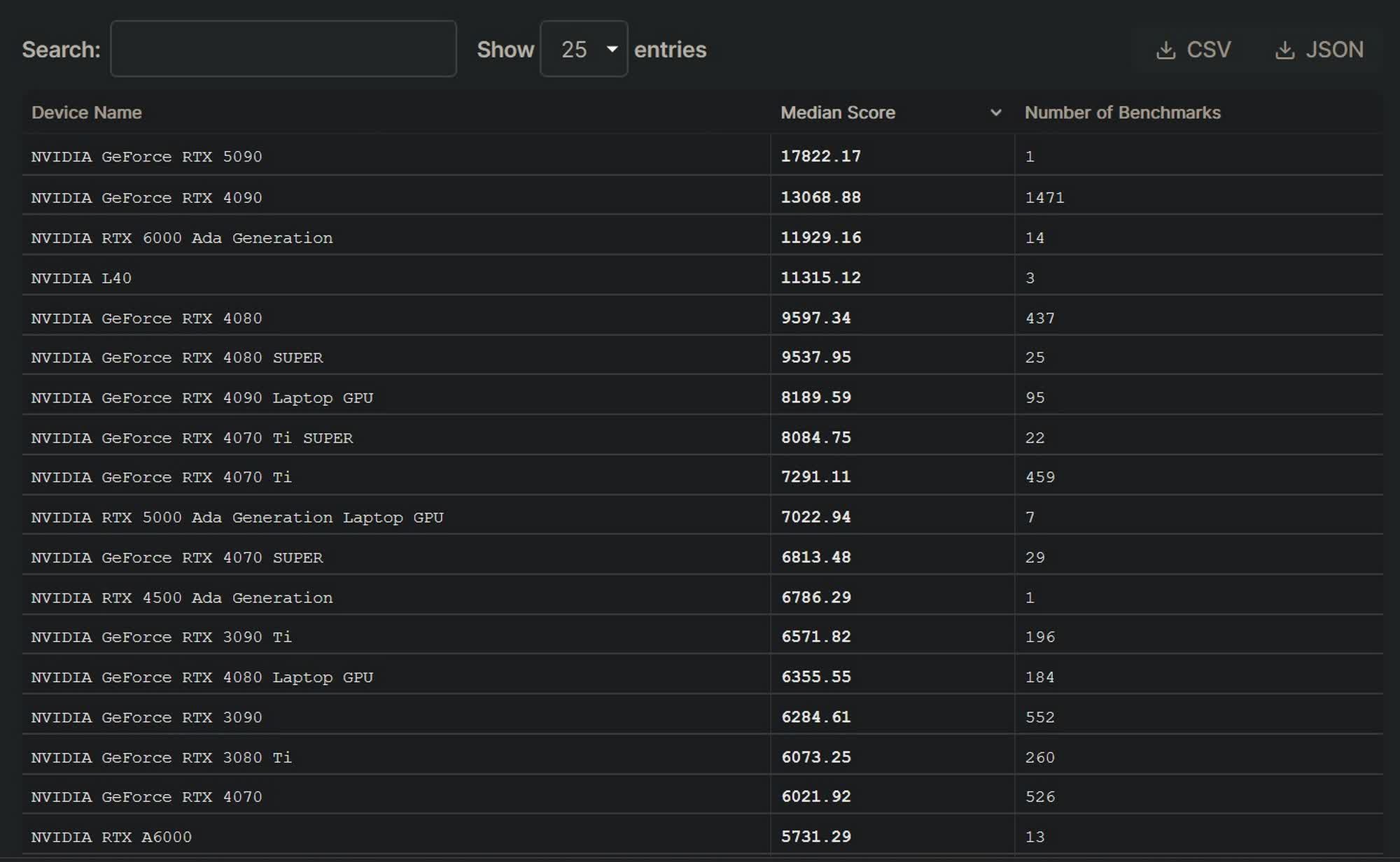

The RTX 5090 was also recently tested in Blender 3.6.0, where it posted a median score of 17,822.17. That makes it roughly 36 percent faster than the RTX 4090, which scored 13,064.17 on the same version of the app. The China-exclusive RTX 5090D scored 14,706.65 in Blender 4.3.0, beating the RTX 4090D's 10,516.64 points by 40 percent.
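The Blender percentages can be reproduced from the median scores quoted above with a short sketch like the one below. Each pair is compared only within its own Blender version (3.6.0 for the RTX 5090/4090, 4.3.0 for the 5090D/4090D), since scores from different Blender releases are not directly comparable. The dictionary labels and the `uplift_pct` helper are illustrative names, not anything from the benchmark itself.

```python
def uplift_pct(new: float, old: float) -> float:
    """Return the percentage by which `new` exceeds `old`."""
    return (new / old - 1.0) * 100.0


# Median Blender benchmark scores (higher is better), grouped by Blender version
blender_scores = {
    "RTX 5090 vs RTX 4090 (Blender 3.6.0)": (17_822.17, 13_064.17),
    "RTX 5090D vs RTX 4090D (Blender 4.3.0)": (14_706.65, 10_516.64),
}

# Compute the uplift within each same-version pair only
uplifts = {label: uplift_pct(new, old) for label, (new, old) in blender_scores.items()}

for label, pct in uplifts.items():
    print(f"{label}: {pct:.1f}% faster")
```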

It is worth noting that these scores should be taken with a grain of salt, as synthetic benchmarks do not always reflect real-world gaming performance, and the Blender benchmark only reflects performance in a single application.

For a better understanding of how the RTX 5090 performs in popular games and how it compares to its predecessor, wait for our exhaustive review later this week. In the meantime, you can also watch Steve unbox our 5090 review sample.

Alongside the RTX 5090, Nvidia also announced the RTX 5080, RTX 5070 Ti and RTX 5070 at CES 2025. The 5090 and 5080 are set to launch on January 30, while the two RTX 5070 models will likely be available next month. The new cards offer many upgrades over the Ada Lovelace generation, but they're also priced higher, with the flagship 5090 costing $1,999.

RTX 5090 early benchmarks show underwhelming performance uplift over the RTX 4090