Squid Surprise


That is BECAUSE:

Blackwell is not designed/engineered for games, like RDNA is.

The Blackwell chip (GB202-300) that the RTX 5090 is based on is not even the full die/chip. The RTX 5090 is based on a PRO card (full die) that sells for $3,499.

CUDA is not for gaming. Nvidia just hides its lack of raw power behind overmarketed gimmicks to sell to gamers, who do not create content or work in enterprise.

The RTX 5080 is going to illustrate that^ even more, because it is an even smaller chip with even less gaming die space than the 5090.

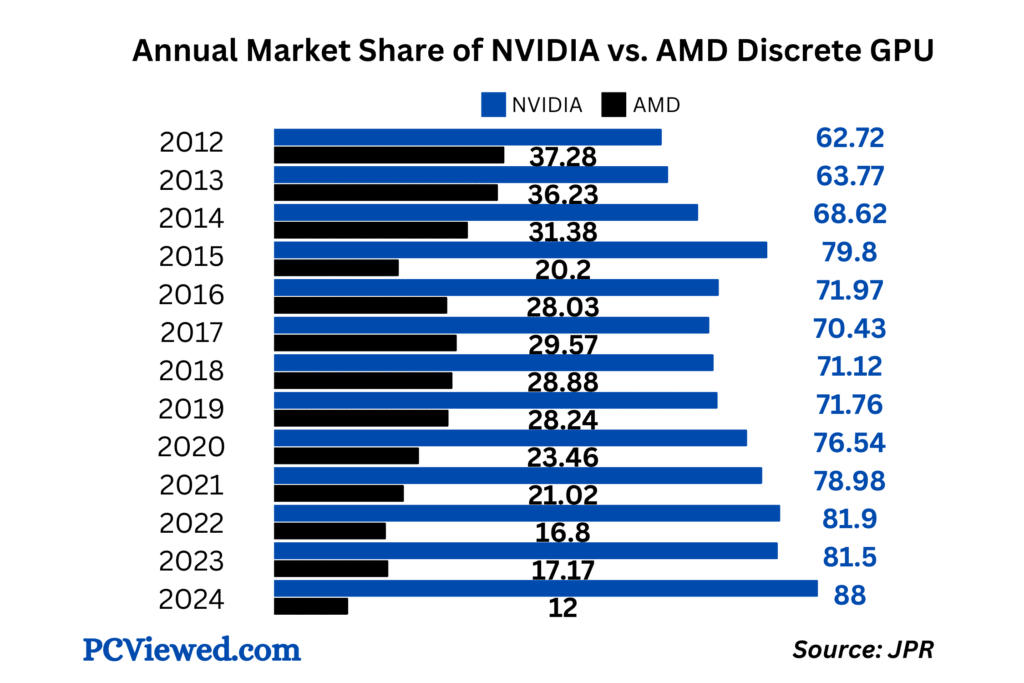

If you are a GAMER, then RTX is not for you. That is why Sony, Microsoft, Steam, etc. all chose RDNA as the architecture for their gaming hardware. AMD is for gaming.

Yeah... except... AMD hasn't rivalled Nvidia for years in the high end - and has already admitted they are never going to.

Don't forget that AMD is going 2 prong: Chiplet and Monolithic.

AMD is about to humiliate Jensen for trying to push so much of their non-gaming hardware off on consumers, instead of Prosumers.

The RX 9080 is going to show the power of RDNA and show price/performance/power supremacy of their Gaming Architecture.

And then later this year, AMD will announce their top-tier chiplet architecture using AMD's prosumer XDNA architecture, allowing AMD to compete directly with Nvidia's $3k "gaming card", but AMD will offer custom chiplet designs catering to individual needs.

If you need more AI, then choose the RX chiplet that has more tensor/XDNA cores, etc. If you want all raster, then pick up the chiplet that has your best interests at heart.

Blackwell is a joke for gaming!

AMD sells low-performance, cost-effective cards... but they haven't been able to hold a candle to Nvidia in ages.

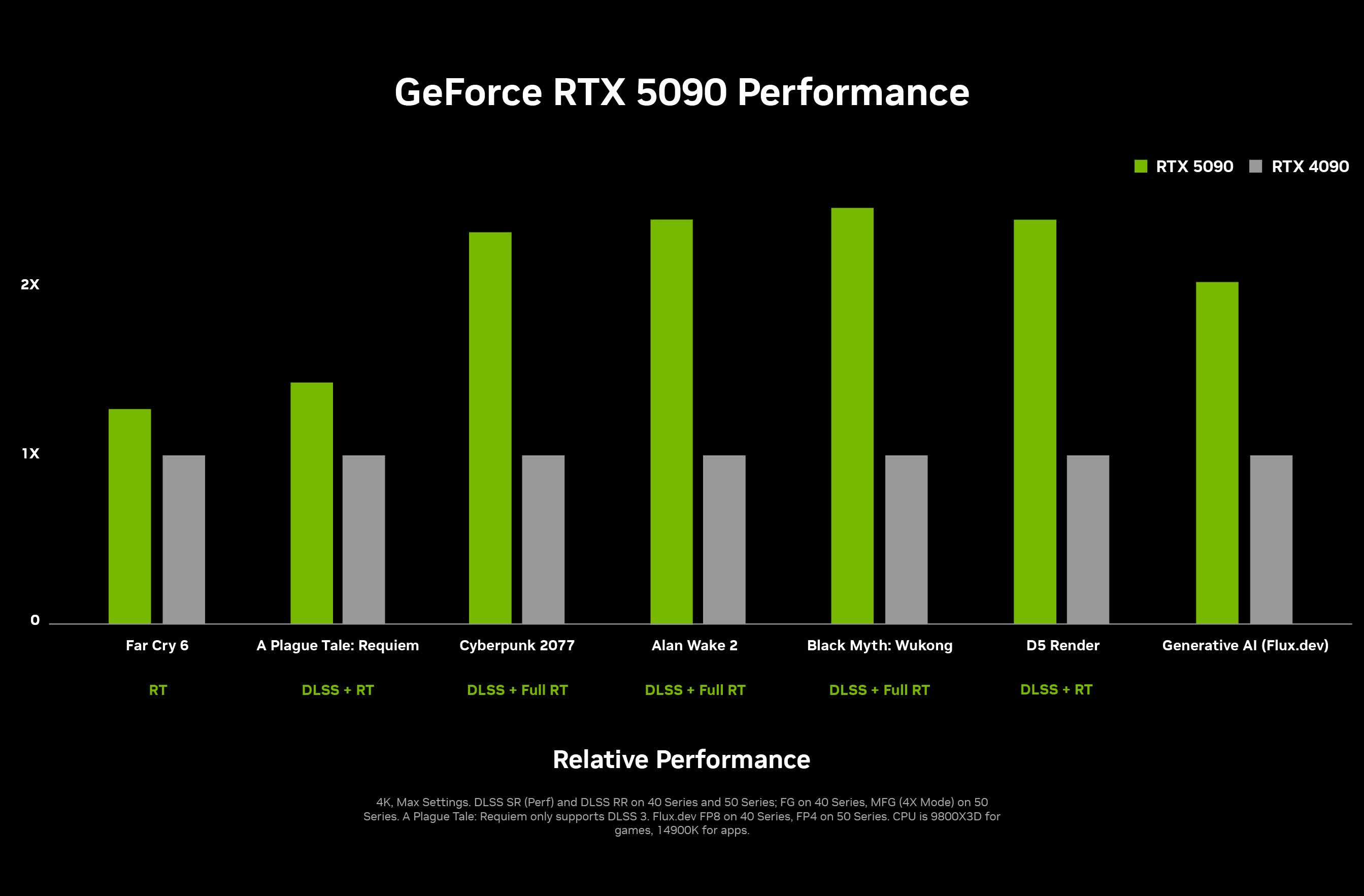

Blackwell might not have been designed SOLELY for gaming - but it still games WAY better than any AMD card you can buy - or will be able to buy in the near future.

Don't be too interested... he's an AMD shill... make sure you fact check everything he posts...

Interesting take I hadn't considered, thank you. I haven't owned an AMD card since my dual HD 5670s in crossfire... and now I feel old.